As a Databricks partner dedicated to creating high-quality data and AI solutions, Qubika was proud to participate in last week’s 2024 Data + AI Summit. The event showcased Databricks’ commitment to advancing the lakehouse approach, revealing a host of features and tools designed to help organizations build and deploy increasingly mature AI solutions.

The summit pointed clearly to a near future in which data lakehouses become the foundation of core enterprise AI applications.

Here are my five key takeaways from the event.

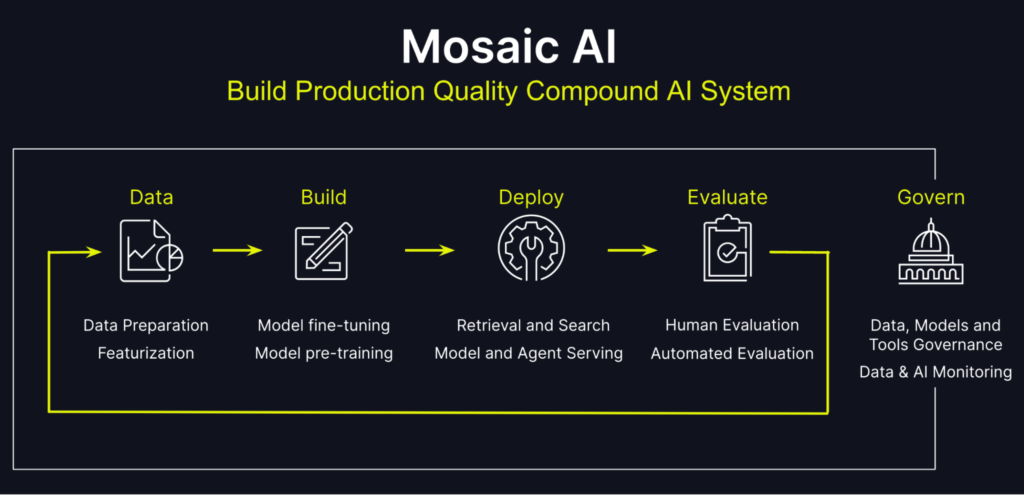

1. Compound AI systems: How Databricks is simplifying the process of building GenAI

The highlight of the summit was the unveiling of Databricks’ vision for compound AI systems, and of how its Mosaic AI platform is enabling organizations to build and deploy more complex and more robust GenAI systems that incorporate multiple components. For example, a typical GenAI system may involve a tuned model, a data retrieval tool, a reasoning agent, and more. It’s now possible to incorporate these different elements within a single platform. This in turn makes it easier to manage and govern the AI system, and to switch between different systems. Keeping the components on one governed platform also helps with data security.
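To make the idea of a compound system concrete, here is a minimal sketch in plain Python of the three components mentioned above (a retrieval tool, a model, and an agent step that ties them together). Every name in it (`Document`, `retrieve`, `stub_model`, `answer`) is hypothetical and chosen for illustration; it does not use or represent the Mosaic AI API, and the "model" is a stub rather than a real LLM.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical names throughout; this is not a Databricks or Mosaic AI API.


@dataclass
class Document:
    doc_id: str
    text: str


def retrieve(query: str, corpus: list[Document], top_k: int = 2) -> list[Document]:
    """Toy retrieval tool: rank documents by how many query words they share."""
    query_words = set(query.lower().split())
    scored = [(len(query_words & set(d.text.lower().split())), d) for d in corpus]
    # Keep only documents with at least one overlapping word, best match first.
    ranked = sorted((s for s in scored if s[0] > 0), key=lambda s: s[0], reverse=True)
    return [doc for _, doc in ranked[:top_k]]


def stub_model(prompt: str) -> str:
    """Stand-in for a tuned model: reports how many context lines it received."""
    context_lines = [line for line in prompt.splitlines() if line.startswith("- ")]
    return f"answer based on {len(context_lines)} retrieved document(s)"


def answer(query: str, corpus: list[Document],
           model: Callable[[str], str] = stub_model) -> str:
    """Minimal agent step: retrieve context, build a prompt, call the model."""
    docs = retrieve(query, corpus)
    context = "\n".join(f"- {d.text}" for d in docs)
    prompt = f"Context:\n{context}\n\nQuestion: {query}"
    return model(prompt)


corpus = [
    Document("a", "lakehouse stores tables"),
    Document("b", "spark processes streams"),
    Document("c", "lakehouse governance rules"),
]
print(answer("lakehouse governance", corpus))
```

Because `answer` takes the model as a parameter, swapping one model for another does not touch the retrieval code, which is the kind of flexibility a single platform for all components is meant to provide.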

2. Business intelligence AI: Empowering business users with AI-driven insights

Databricks’ integration of BI AI capabilities directly into the platform is a significant step towards ensuring that every individual at an organization has access to data-based insights. By embedding AI-powered tools into the lakehouse, Databricks is enabling business users to explore data and generate insights without having to rely on technical expertise. This democratization of access to data is something that BI vendors and organizations have been trying to achieve for years. This effort by Databricks looks like the real deal, and I expect it to become a key force in creating and improving organizations’ data-driven cultures.

3. Lakehouse advancements: The fuel for the upcoming AI revolution

The summit underscored Databricks’ dedication to enhancing the lakehouse architecture, the foundation upon which businesses such as Qubika are building AI solutions. They showcased advancements in data ingestion, processing, and governance, making it easier than ever to manage and harness diverse data assets within a unified, scalable platform.

4. LakeFlow: Simplifying data pipelines for AI workloads

A further personal highlight of the event was seeing the introduction of LakeFlow, a data movement and transformation framework. There are three main areas here:

- Much easier native data ingestion from SaaS applications. With LakeFlow Connect it’s now possible to integrate Databricks with various SaaS applications, such as your CRM system.

- LakeFlow Pipelines to streamline ELT processes. This simplified approach reduces the complexity of data engineering, enabling teams to focus on developing and deploying AI models. Here at Qubika we use a refined ELT process to efficiently transform disorganized data into structured, valuable assets, and we’ve seen just how important this is to creating a solid foundation for LLMs and other AI systems.

- LakeFlow Orchestrator to reduce the need for external tools like Airflow. As a native orchestrator, it streamlines the management of complex AI pipelines. Additionally, enhancements to Delta Sharing enable seamless data sharing across platforms, fostering collaboration and accelerating the development of AI solutions.
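For readers less familiar with what an orchestrator does, the sketch below shows the core idea in plain Python: run pipeline tasks in dependency order and pass each task the outputs of its upstream tasks. It relies only on the standard library's `graphlib`; the task names (`ingest`, `transform`, `train`) and the `run_pipeline` helper are hypothetical and are not LakeFlow or Airflow APIs.

```python
from graphlib import TopologicalSorter

# Hypothetical illustration of dependency-ordered execution; not a LakeFlow API.


def run_pipeline(tasks, dependencies):
    """Run each task after its upstream tasks and hand it their results.

    tasks:        mapping of task name -> function taking a dict of upstream results
    dependencies: mapping of task name -> set of task names it depends on
    """
    order = list(TopologicalSorter(dependencies).static_order())
    results = {}
    for name in order:
        upstream = {dep: results[dep] for dep in dependencies.get(name, ())}
        results[name] = tasks[name](upstream)
    return order, results


tasks = {
    # Stand-in for pulling raw records from a source system.
    "ingest": lambda upstream: [3, 1, 2],
    # Stand-in for an ELT transformation step.
    "transform": lambda upstream: sorted(upstream["ingest"]),
    # Stand-in for a model-training step that consumes the cleaned data.
    "train": lambda upstream: sum(upstream["transform"]) / len(upstream["transform"]),
}
dependencies = {"transform": {"ingest"}, "train": {"transform"}}

order, results = run_pipeline(tasks, dependencies)
print(order)
print(results["train"])
```

A production orchestrator adds scheduling, retries, and monitoring on top of this ordering logic, which is where a native tool can remove the need to operate a separate system.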

5. Spark real-time mode: Real-time AI in action

The debut of Spark’s real-time mode is a breakthrough for applications that demand real-time AI insights. By eliminating microbatching, Spark now delivers real-time processing capabilities, empowering organizations to respond to events and data changes instantaneously. This opens up new possibilities for use cases such as real-time personalization for customers, fraud detection, or a real-time dashboard for internal users. At Qubika we’ve seen the importance of having real-time, or at least close to real-time, responses for AI systems to work effectively and deliver timely and accurate insights.
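To illustrate why removing microbatching matters for latency, here is a simplified model in plain Python. It is not how Spark is implemented; it assumes, purely for illustration, that a microbatch engine processes events only at fixed interval boundaries, that a per-event engine processes each event on arrival, and that processing itself takes a fixed `processing_cost_ms`. The function names and the numbers are hypothetical.

```python
# Simplified latency model; an illustration only, not Spark's implementation.


def microbatch_latencies(arrival_ms, batch_interval_ms, processing_cost_ms=0):
    """Each event waits for the end of its batch window, then is processed."""
    latencies = []
    for t in arrival_ms:
        # Round the arrival time up to the next batch boundary (integer maths).
        boundary = -(-t // batch_interval_ms) * batch_interval_ms
        latencies.append(boundary - t + processing_cost_ms)
    return latencies


def per_event_latencies(arrival_ms, processing_cost_ms=0):
    """Each event is processed as soon as it arrives."""
    return [processing_cost_ms for _ in arrival_ms]


arrivals = [120, 450, 980, 1000]  # event arrival times in milliseconds
batched = microbatch_latencies(arrivals, batch_interval_ms=500, processing_cost_ms=5)
streamed = per_event_latencies(arrivals, processing_cost_ms=5)

print("microbatch:", batched)
print("per-event: ", streamed)
```

Under these assumptions, the waiting time in the microbatch case depends on where in the window an event happens to land, whereas the per-event case pays only the processing cost. That difference is what matters for use cases such as fraud detection.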

Qubika’s perspective

As a Databricks Consulting Partner that has designed data transformation and migration strategies for many clients, we’re excited by the potential of these advancements to further enhance how organizations build and deploy AI solutions on the lakehouse platform. At the event it felt like several years of gradual advancements have finally come together to provide the basis for fundamental change in the development of AI systems.

We’re passionate about helping our clients harness the power of Databricks to drive innovation and achieve their AI-driven transformation goals. Check out the slides below for a couple of case study examples of our work.

Qubika-and-Databricks-Partnership