Introduction

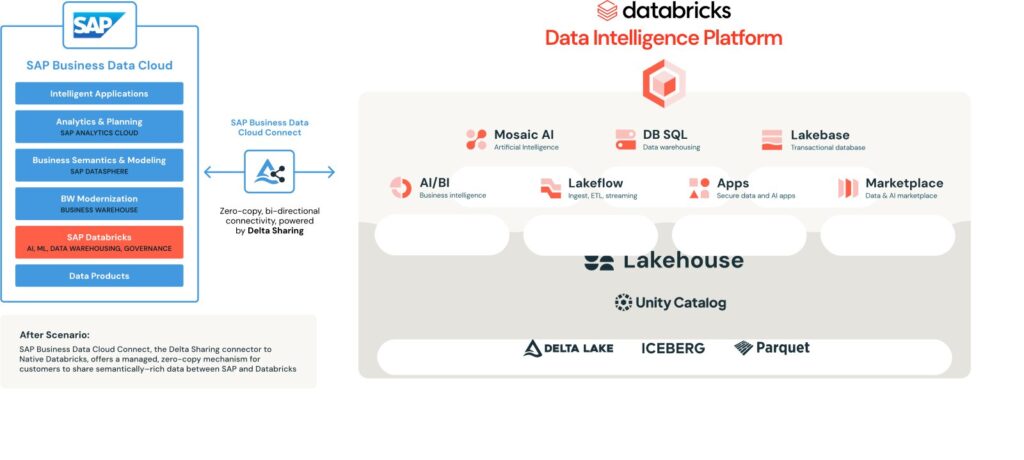

SAP and Databricks have partnered to bridge the gap between SAP’s enterprise data and modern analytics/AI platforms. The result is SAP Business Data Cloud (BDC) Connect for Databricks, a new integration that lets businesses seamlessly share data between SAP’s ecosystem and the Databricks Lakehouse with minimal effort. This connector addresses long-standing challenges in blending SAP data (often siloed in ERP, HR, procurement systems, etc.) with other enterprise data for analytics.

Traditionally, companies had to rely on brittle ETL pipelines, manual exports, or data duplication, which introduced governance risks, silos, high maintenance cost, and loss of the business context that SAP data carries. BDC Connect eliminates these pain points by enabling live, zero-copy data sharing: SAP data can be accessed in Databricks without extracting it out of SAP’s cloud, and vice versa, maintaining a single source of truth with full business context.

From a business perspective, this integration is transformative. It allows organizations to accelerate AI and BI initiatives using trusted SAP data combined with non-SAP data on a unified platform. For example, supply chain or finance teams can run advanced analytics or AI models on Databricks directly using SAP ERP data enriched with other sources, all while preserving SAP’s semantic definitions (so everyone speaks the same “business language”). By closing the “context gap” between SAP transactional data and analytics, companies can make more informed decisions and leverage AI with greater accuracy and relevance. In a previous post, “Integrating SAP On-Premise with Databricks: How to Unlock AI Before Migrating to the Cloud,” we explored how to integrate legacy, on-premise SAP systems with Databricks to prepare for this evolution. This article focuses on the next stage: SAP in the cloud, and how to take full advantage of the native integration.

Key Features of the BDC–Databricks Connector

The SAP BDC Connector for Databricks introduces several powerful capabilities that cater to both IT and business users:

- Zero-Copy, Live Data Sharing: Leverages Databricks’ open Delta Sharing protocol to enable live access to data without copying or moving it out of SAP’s environment. Data remains in the SAP Business Data Cloud’s storage, and Databricks queries it in place, eliminating duplicated datasets and ensuring consistent governance. This live linkage means no more nightly extracts or stale data: Databricks can query SAP data products in real time.

- Bi-Directional Integration: The connector supports secure data sharing in both directions. SAP data products (curated datasets from SAP applications) can be exposed to Databricks for analysis, and conversely Databricks data or AI results can be shared back into SAP BDC. This bi-directional flow lets organizations enrich SAP’s analytics with external data and also bring advanced analytics results (e.g. machine learning predictions) into SAP’s sphere for operational use, all without manual file transfers.

- Preservation of Business Semantics: SAP BDC provides curated “Data Products,” which are essentially business-friendly datasets (e.g. a unified “Financial Actuals” table rather than dozens of raw SAP tables) with consistent fields and definitions managed by SAP. By sharing these data products to Databricks, the full business context and semantic metadata are preserved. Analysts and data scientists can thus work with SAP data in Databricks that retains meaningful names and relationships (as defined in SAP), avoiding the ambiguity that often comes with raw data dumps.

- Unified Governance and Security: The integration is built with enterprise security in mind. It uses mutual TLS (mTLS) and OpenID Connect (which builds on OAuth 2.0) for authentication between SAP and Databricks, ensuring a secure, trusted connection. On the Databricks side, all shared data is governed through Unity Catalog, so administrators can apply fine-grained access controls and auditing just like for any other data. This unified governance means that even though data is flowing across platforms, it remains compliant and traceable.

- Minimal Data Engineering Overhead: BDC Connect is designed to be simple and “click-driven” rather than a complex integration project. Setting up the connection involves only a few steps in each platform’s interface, with no custom ETL code needed. Once connected, SAP data appears in Databricks as Delta tables that teams can query immediately, and new data products can be shared with a single click from SAP’s side. This ease-of-use means faster time-to-value – data teams can focus on analysis and modeling instead of pipeline maintenance.

Together, these features allow enterprises to treat Databricks as an extension of their SAP data estate – effectively an “operating system” for all data – while SAP Business Data Cloud continues to ensure the data’s trustworthiness and business context.

Architecture Overview

At a high level, SAP Business Data Cloud Connect for Databricks links the SAP data fabric with the Databricks Lakehouse using Delta Sharing as the bridge. In the SAP BDC environment, enterprise data from SAP systems (e.g. S/4HANA, SAP SuccessFactors, SAP Ariba, etc.) is curated into “Data Products”. These are semantically rich, analytics-ready views of the data managed by SAP and stored in the BDC data lake (object store). On the other side, Databricks operates on an open Lakehouse architecture with Delta Lake tables and Unity Catalog for governance. BDC Connect essentially establishes a trust relationship between SAP BDC and a Databricks workspace, enabling each side to see the other’s data through Delta Sharing without physically moving files.

In practice, an SAP BDC administrator can publish or “share” a chosen data product (say, a Sales Orders dataset) to Databricks via the BDC Connect interface. Under the hood, this makes SAP BDC act as a Delta Sharing Provider, where the data product’s data (stored in Parquet/Delta format in SAP’s cloud storage) is made available through a secure share endpoint. On the Databricks side, a Databricks Unity Catalog admin creates a Delta Sharing Recipient that corresponds to the SAP BDC connection.

Once the linkage is approved on both sides (SAP and Databricks), the shared SAP data can be mounted in Databricks as a table in a Unity Catalog catalog (using Databricks’ “Create catalog from share” functionality). This mount is a logical reference – the data still lives in SAP’s storage, but Databricks can query it live. Data scientists or analysts can then run SQL queries, notebooks, or ML models on these SAP tables in Databricks, joining them with other non-SAP data as needed, all in real time. Crucially, the SAP data retains its business-defined schema and comes with the latest values (since it’s not a stale extract).
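As a rough illustration of the mounting step, the sketch below builds the Databricks SQL that creates a catalog from a share and then joins a shared SAP table with a non-SAP table. The `CREATE CATALOG ... USING SHARE` statement is standard Databricks SQL; every object name (`sap_bdc`, `sales_orders_share`, `sap_sales`, `main.crm.web_leads`, `customer_id`) is a hypothetical placeholder, and the names your BDC connection actually produces may differ.

```python
def quote_ident(name: str) -> str:
    """Backtick-quote one SQL identifier, doubling any embedded backticks."""
    return "`" + name.replace("`", "``") + "`"


def qualified(*parts: str) -> str:
    """Join identifier parts into a dotted, quoted name (catalog.schema.table)."""
    return ".".join(quote_ident(p) for p in parts)


def create_catalog_from_share(catalog: str, provider: str, share: str) -> str:
    """Build the statement that mounts a Delta Sharing share as a catalog."""
    return (
        f"CREATE CATALOG IF NOT EXISTS {quote_ident(catalog)} "
        f"USING SHARE {qualified(provider, share)}"
    )


def join_on_key(sap_table: str, other_table: str, key: str) -> str:
    """Build a query joining a shared SAP table with a non-SAP table on one column."""
    return f"SELECT * FROM {sap_table} JOIN {other_table} USING ({quote_ident(key)})"


if __name__ == "__main__":
    # All object names here are hypothetical placeholders, not names from SAP or Databricks.
    print(create_catalog_from_share("sap_sales", "sap_bdc", "sales_orders_share"))
    print(
        join_on_key(
            qualified("sap_sales", "sales", "sales_orders"),
            qualified("main", "crm", "web_leads"),
            "customer_id",
        )
    )
```

In a Databricks notebook, each generated string could be passed to `spark.sql(...)`, where `spark` is the session the notebook provides. Building the statements as plain strings keeps the sketch runnable outside Databricks.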

For the reverse direction, Databricks can also share data back to SAP. This means a table or view created in Databricks (for example, a machine learning insights table or a merged dataset combining SAP and external data) can be exposed via Delta Sharing to SAP BDC. SAP BDC, through BDC Connect, can accept that incoming share and make it available in the SAP environment, for instance to be consumed in SAP Datasphere or SAP Analytics Cloud for reporting. This bi-directional loop enables unified analytics: an SAP Analytics Cloud dashboard could seamlessly report on data that was partially prepared in Databricks, and an AI model in Databricks could be trained on live SAP data and feed results back into an SAP application scenario. Both sides use the open Delta Sharing protocol, so the data exchange is technology-agnostic and doesn’t require custom adapters.
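The Databricks side of this reverse direction can be sketched with the generic Delta Sharing statements in Databricks SQL (`CREATE SHARE`, `ALTER SHARE ... ADD TABLE`, `GRANT SELECT ON SHARE ... TO RECIPIENT`). This is a minimal sketch under assumptions: the share, table, and recipient names are hypothetical, and whether BDC Connect expects exactly these steps or handles part of them through its own interface is not confirmed by this article.

```python
from typing import List


def q(name: str) -> str:
    """Backtick-quote one SQL identifier, doubling any embedded backticks."""
    return "`" + name.replace("`", "``") + "`"


def share_back_statements(share: str, table: str, recipient: str) -> List[str]:
    """Build the statements that expose one Unity Catalog table through a share.

    `table` must be a three-part name (catalog.schema.table); anything else
    raises ValueError when the name is unpacked.
    """
    catalog, schema, name = table.split(".")
    full_name = ".".join(q(part) for part in (catalog, schema, name))
    return [
        f"CREATE SHARE IF NOT EXISTS {q(share)}",
        f"ALTER SHARE {q(share)} ADD TABLE {full_name}",
        f"GRANT SELECT ON SHARE {q(share)} TO RECIPIENT {q(recipient)}",
    ]


if __name__ == "__main__":
    # Hypothetical names: a churn-score table shared to a recipient representing SAP BDC.
    for statement in share_back_statements(
        "ml_insights_share", "main.ml.churn_scores", "sap_bdc_recipient"
    ):
        print(statement)
```

Keeping the three statements together makes the order explicit: the share must exist before a table is added to it, and the recipient only sees the table once the grant is issued.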

Security and governance are enforced throughout this architecture. The connection setup involves exchanging secure tokens/credentials between the platforms: a Databricks admin generates a secure connection identifier which the SAP admin uses to create a Third-Party Connection in BDC (this establishes the trust). The data itself is shared over HTTPS with mutual TLS, and access is only granted to the specific tables the admin shared. On Databricks, any user accessing the SAP-shared data needs appropriate Unity Catalog permissions, and all access is audited. From SAP’s perspective, any data coming from Databricks can be governed in BDC’s catalog as well.
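To make the Unity Catalog permission requirement concrete, here is a small sketch that builds read-only `GRANT` and `REVOKE` statements at the catalog level, relying on Unity Catalog's downward privilege inheritance. The catalog name `sap_sales` and the group `finance_analysts` are hypothetical, and it is worth verifying which grant levels your workspace allows on a catalog created from a share.

```python
READ_PRIVILEGES = "USE CATALOG, USE SCHEMA, SELECT"


def q(name: str) -> str:
    """Backtick-quote one SQL identifier or principal, doubling embedded backticks."""
    return "`" + name.replace("`", "``") + "`"


def grant_read(catalog: str, principal: str) -> str:
    """Build a statement giving a principal read-only access to a whole catalog."""
    return f"GRANT {READ_PRIVILEGES} ON CATALOG {q(catalog)} TO {q(principal)}"


def revoke_read(catalog: str, principal: str) -> str:
    """Build the statement that withdraws the same read-only access."""
    return f"REVOKE {READ_PRIVILEGES} ON CATALOG {q(catalog)} FROM {q(principal)}"


if __name__ == "__main__":
    # Hypothetical catalog (mounted from an SAP share) and hypothetical group.
    print(grant_read("sap_sales", "finance_analysts"))
    print(revoke_read("sap_sales", "finance_analysts"))
```

Granting to a group rather than to individual users keeps the access list short and easier to audit.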

It’s worth noting that with an external Databricks (brownfield) integration, SAP BDC does not automatically expose all data – administrators explicitly choose which data products to share with Databricks. This differs from the embedded SAP Databricks (greenfield) scenario, where the curated data products are readily available internally. In the brownfield case, you maintain control over what leaves the SAP cloud. Both SAP-managed Data Products and custom Data Products (from SAP Datasphere’s object store) are supported in sharing, meaning if you have extended the SAP models or added custom datasets in BDC, those can be shared as well.

Overall, the architecture ensures a “single version of the truth” – SAP data remains governed in SAP’s cloud, but is virtually accessible in Databricks for combined analytics, and vice versa, forming a tightly integrated data fabric across the two platforms.

Compatibility and Roadmap

The SAP BDC–Databricks connector has evolved rapidly, and its availability has expanded over 2025. Below is a brief roadmap of its rollout and related capabilities:

- Early 2025 (Preview/Initial Release): SAP Business Data Cloud was introduced as SAP’s next-generation data platform (building on SAP Datasphere, which remains a component) around Q1 2025. It initially launched on AWS with SAP Databricks (embedded) included as a component. At this stage, “greenfield” integration was the focus: customers could use the Databricks engine provided inside BDC, but connecting an existing external Databricks workspace (a brownfield scenario) was not yet generally available. SAP’s strategy and partnership with Databricks were clear – Delta Sharing would be used for open data integration – and early pilot customers began testing the connector in a preview capacity. During this period, SAP BDC was only offered to RISE with SAP (private cloud edition) S/4HANA customers, and support for other deployments (e.g. on-prem or public cloud S/4HANA) was still on the roadmap.

- Mid 2025: As SAP BDC matured, the Databricks external connector entered public preview for select customers. Feedback from these trials was positive, and SAP iterated on the integration. By mid-year, SAP also expanded BDC’s availability to Azure (since the initial launch was on AWS) – indeed, SAP BDC on Azure was targeted for the second half of 2025. This implied that the Databricks connector needed to support Azure Databricks as well. Internal tests and partner insights at this time showed that the connector significantly simplified SAP–Databricks data scenarios, allowing, for example, SAP’s Insight Apps (pre-built analytics applications in BDC) to feed data to Databricks and leverage its AI capabilities. SAP also indicated plans to eventually include currently unsupported data like custom Z-tables from SAP systems into BDC (and thus into the sharing framework) in later phases, further increasing the connector’s utility.

- September 2025 (General Availability): The SAP Business Data Cloud Connector for Databricks became generally available across all major cloud platforms by the end of September 2025. Databricks officially announced this GA on October 6, 2025, highlighting that customers on AWS, Azure, and GCP Databricks can now all use the connector. The GA release delivers full bi-directional, live data sharing between SAP and Databricks with Delta Sharing, as described earlier. It marked a significant milestone, effectively allowing any existing Databricks customer (with Unity Catalog) to link up with their SAP BDC tenant in a supported, production-ready manner. Alongside GA, SAP and Databricks showcased this capability at events like SAP TechEd and SAP Connect 2025 with live demos, underlining the real-world business impact (Accenture, Deloitte, and other partners shared success stories of blending SAP data into AI projects on Databricks).

- Late 2025 and Beyond: SAP is expanding the “open data ecosystem.” The BDC Connect framework is not limited to Databricks – SAP announced that Google’s data platform is another early partner, with a similar connector in public preview by late 2025. This likely refers to integration with Google BigQuery or Google Cloud environments (enabling live sharing of SAP data to Google’s analytics cloud in a Delta Sharing or similar manner). As SAP BDC continues to evolve, we expect more connectors (possibly for other Lakehouse platforms or data clouds) to be introduced, all following the zero-copy, governed sharing model. On the SAP side, future updates will broaden support for source systems and content: e.g., including public cloud SAP ERP editions once available, and incorporating custom tables and more Insight Apps as data products. Essentially, the roadmap is about widening compatibility (more cloud targets, more data sources) and deepening functionality (more types of data and content that can be shared). SAP’s vision is to enable a true “data fabric” where no matter where data resides (in SAP or external), it can be harmonized and used with full business context. The GA of the Databricks connector is a big step in that direction, and ongoing enhancements will further close any gaps (for example, improving performance, adding automation for sharing setup, etc.) as more users adopt the solution.

Licensing and Technical Requirements

Licensing Considerations: SAP Business Data Cloud (BDC) is a separately licensed SaaS product in SAP’s portfolio. It is sold on a subscription basis measured in Capacity Units (CUs) per month (similar to how other SAP cloud services are licensed). This subscription essentially bundles the various components of BDC – including SAP-managed data products, SAP Analytics Cloud, and the embedded Databricks engine. Notably, if you use the SAP-provided Databricks (SAP Databricks) that comes with BDC, you do not need a separate Databricks license from Databricks Inc.; the usage is covered under your SAP BDC subscription. (In fact, SAP acts as the Databricks service provider in that scenario.) However, if you plan a brownfield integration with an existing Databricks workspace, you will continue to pay for your Databricks usage as per your cloud provider/Databricks contract on that side, in addition to the SAP BDC subscription for the SAP side. There is no extra SAP fee specifically for using the BDC Connector – as long as you have BDC, SAP allows you to share the data out to Databricks at no additional cost. The key point is that to leverage this connector, you must be an SAP BDC customer. Even if you already have a Databricks environment, you cannot access SAP’s curated data products through Delta Sharing unless you subscribe to BDC (which generates those data products and provides the BDC Connect service).

SAP currently requires BDC customers to license at least one “Insight App” (SAP’s pre-built analytical application content) as part of the subscription – this ensures that customers have a baseline use case for BDC.

In summary, from a licensing standpoint, BDC Connect for Databricks is included with the BDC platform (no separate license SKU for the connector itself), but it does necessitate that you maintain the appropriate SAP BDC subscription. Existing Databricks users should factor in that they will be paying for both BDC (to get the SAP data and integration) and their Databricks consumption (for the analytics side), but the value gained is the ability to combine those worlds with no heavy integration cost.

Technical Requirements: To deploy the SAP BDC–Databricks connector, there are a few technical prerequisites on each side:

- SAP Side (Business Data Cloud): You need an active SAP BDC tenant provisioned. BDC is currently available to RISE with SAP S/4HANA (private cloud edition) customers (as of the initial GA). Within BDC, your SAP data (from ERP and other sources) should be onboarded – typically through SAP Datasphere integration or SAP’s data replication services – and modeled into Data Products. The connector works at the data product level, so having the relevant data products published in the BDC catalog is a prerequisite. You also need an SAP BDC administrator user with rights to create Third-Party Connections. No additional hardware or installation is needed since BDC is SaaS; it’s more about configuration. Ensure that the BDC environment is in a supported region that can communicate with your Databricks workspace (generally internet connectivity is used via secure API endpoints).

- Databricks Side: You must have a Unity Catalog–enabled Databricks workspace (on AWS, Azure, or GCP). Unity Catalog is required because the connector uses Delta Sharing, which in Databricks is managed through Unity Catalog’s sharing feature. If your workspace is not yet on Unity Catalog (i.e., still using only the legacy Hive metastore), you’ll need to enable Unity Catalog and attach clusters to it. You should also be on a relatively recent Databricks Runtime that supports Delta Sharing (Databricks Runtime 11.x or above, with UC – by 2025 this is standard). A Databricks account admin or workspace admin is required to set up the connection, as they need permissions to create a Delta Sharing Provider and Recipient. Delta Sharing should be configured for your account – this involves enabling open sharing and (for some clouds) setting up a token and recipient identifiers. The Databricks admin will generate a connection token (identifier) to be shared with the SAP admin during setup.

- Networking and Security: The integration communicates over secure HTTPS. Databricks will call the SAP BDC Delta Sharing endpoint URL (which is an API endpoint given by SAP when the connection is established), and SAP BDC will call the Databricks sharing endpoint for the reverse share. There is no need for direct VPC network peering or VPN since the data sharing happens via REST APIs with encryption. However, you should ensure that your Databricks workspace’s outbound access is not restricted from reaching SAP’s endpoints. If there are firewall rules, they may need to allow the specific SAP BDC endpoint. Similarly, the SAP side must be able to reach the Databricks public sharing endpoints. The protocol uses short-lived secure tokens for data access, and mTLS as mentioned adds an extra layer of trust. Both sides will log the access for auditing (Databricks will even share some usage metrics back to SAP for billing transparency, such as how much SAP data was queried, per the documentation).

- Setup Process: The setup is straightforward:

  - In Databricks, an admin creates a Delta Sharing Provider (representing SAP BDC) or obtains a ready connection identifier/token.

  - In the SAP BDC Cockpit, an admin creates a Third-Party Connection for Databricks, pasting that identifier, and generates an invitation link or confirmation token.

  - Back in Databricks, the admin completes the connection using the invitation from SAP (this finalizes the trust).

  After this, the SAP data products that are shared will appear as external tables in Databricks, and Databricks shares that are granted to SAP will appear in the BDC catalog. It’s a one-time configuration: adding new data products to share later doesn’t require repeating the whole setup, just a share action on the SAP side for each dataset.

In summary, technically the connector requires an up-to-date SAP BDC environment and a Unity Catalog Databricks workspace with internet connectivity. Most modern enterprises meeting the target use case (SAP ERP + Databricks usage) will be able to satisfy these requirements. SAP provides detailed guides to set up the connection, and because it’s mostly configuration, the time to enable this integration can be as short as a few hours once prerequisites are in place.
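The Databricks-side prerequisites listed above can be turned into a simple pre-flight check. The sketch below is illustrative only: `WorkspaceFacts` and `preflight` are hypothetical helpers, the facts are supplied by hand rather than read from any Databricks or SAP API, and the endpoint URL is a placeholder. The minimum runtime of 11 comes from the requirement stated in this article.

```python
import re
from dataclasses import dataclass
from typing import List
from urllib.parse import urlparse

MIN_RUNTIME_MAJOR = 11  # from the article: Databricks Runtime 11.x or above


@dataclass
class WorkspaceFacts:
    """Hand-collected facts about the environment (hypothetical structure)."""
    unity_catalog_enabled: bool
    runtime_version: str   # e.g. "14.3.x-scala2.12"
    bdc_endpoint_url: str  # endpoint URL provided by SAP; placeholder in this sketch


def runtime_major(version: str) -> int:
    """Extract the major version from a runtime string such as '14.3.x-scala2.12'."""
    match = re.match(r"(\d+)\.", version)
    if match is None:
        raise ValueError(f"unrecognized runtime version: {version!r}")
    return int(match.group(1))


def preflight(facts: WorkspaceFacts) -> List[str]:
    """Return a list of human-readable problems; an empty list means all checks passed."""
    problems = []
    if not facts.unity_catalog_enabled:
        problems.append("Unity Catalog is not enabled on the workspace")
    try:
        if runtime_major(facts.runtime_version) < MIN_RUNTIME_MAJOR:
            problems.append(f"runtime {facts.runtime_version} is older than {MIN_RUNTIME_MAJOR}.x")
    except ValueError as err:
        problems.append(str(err))
    url = urlparse(facts.bdc_endpoint_url)
    if url.scheme != "https" or not url.hostname:
        problems.append("BDC endpoint must be an https URL with a host name")
    return problems


if __name__ == "__main__":
    facts = WorkspaceFacts(True, "14.3.x-scala2.12", "https://bdc.example.com/sharing")
    print(preflight(facts) or "all checks passed")
```

The check only validates the shape of the inputs. It does not test actual network reachability or firewall rules, which still need to be confirmed from inside the workspace.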

Conclusion

The SAP Business Data Cloud connector for Databricks represents a significant leap in SAP’s data strategy. It provides a deeply integrated yet open bridge between SAP’s world of structured, context-rich business data and the flexible, AI-ready world of Databricks and data lakehouses.

For businesses, this means data no longer needs to be stuck in silos. SAP data can flow securely to where advanced analytics happen, and insights can flow back into SAP, all in a governed, efficient manner. By leveraging open standards (Delta Lake format, Delta Sharing protocol) and preserving the meaning of the data, SAP and Databricks enable enterprise AI and BI that’s both powerful and trustworthy. The connector is already generally available and delivering value: early adopters report faster development of AI solutions and more autonomous decision-making capabilities, since AI agents can now work with real-time SAP data alongside other sources.

As SAP continues to enhance Business Data Cloud, we expect the integration with Databricks to grow even richer (supporting more scenarios and data types). In essence, SAP BDC Connect for Databricks is helping redefine what it means to have a unified data ecosystem: you get the best of SAP (business context, enterprise processes) and the best of Databricks (analytics scale, machine learning) in one synergistic environment. It’s a compelling example of an open data ecosystem unlocking business value from previously isolated data, paving the way for the intelligent, AI-powered enterprise that SAP envisions.